我们知道HashMap拥有高读取性能,the hash-map has O(1) access with high probability (注意是最理想情况是O(1),但是相比O(n)更接近O(1))。高读取性能数据结构背后具体是怎么实现的呢,这篇笔记就一起来总结下。

缘起

数据结构中有数组和链表来实现对数据的存储,但这两者基本上是两个极端。

数组

数组存储区间是连续的,占用内存严重,故空间复杂的很大。但数组的二分查找时间复杂度小,为O(1);数组的特点是:寻址容易,插入和删除困难;

链表

链表存储区间离散,占用内存比较宽松,故空间复杂度很小,但时间复杂度很大,达O(N)。链表的特点是:寻址困难,插入和删除容易。

哈希表

那么我们能不能综合两者的特性,做出一种寻址容易,插入删除也容易的数据结构?答案是肯定的,这就是我们要提起的哈希表。哈希表((Hash table)既满足了数据的查找方便,同时不占用太多的内容空间,使用也十分方便。

在一个随机性良好的hash函数的情况下,hash模型基本和以下问题一致: 把k个球随机放入n个盒子,碰撞次数定义为有球的总数k减去有球的盒的个数(当然,不同存储结构有所不同)。

碰撞的概率还跟当前空间中已经被映射的点的数量有关,存入hash的元素越多,碰撞概率就越大。给定k个球,随机放入n个盒子,在相同盒子里面的球的期望个数也会增大。

hash方法

元素特征转变为数组下标的方法就是散列法。散列法当然不止一种,下面列出三种比较常用的:

1, 除法散列法 最直观的一种,上图使用的就是这种散列法,公式: index = value % 16 学过汇编的都知道,求模数其实是通过一个除法运算得到的,所以叫“除法散列法”。

2, 平方散列法 求index是非常频繁的操作,而乘法的运算要比除法来得省时(对现在的CPU来说,估计我们感觉不出来),所以我们考虑把除法换成乘法和一个位移操作。公式:

index = (value * value) >> 28 (右移,除以2^28。记法:左移变大,是乘。右移变小,是除。)

如果数值分配比较均匀的话这种方法能得到不错的结果,但我上面画的那个图的各个元素的值算出来的index都是0——非常失败。也许你还有个问题,value如果很大,value * value不会溢出吗?答案是会的,但我们这个乘法不关心溢出,因为我们根本不是为了获取相乘结果,而是为了获取index。

3, 斐波那契(Fibonacci)散列法

平方散列法的缺点是显而易见的,所以我们能不能找出一个理想的乘数,而不是拿value本身当作乘数呢?答案是肯定的。

- 对于16位整数而言,这个乘数是40503

- 对于32位整数而言,这个乘数是2654435769

- 对于64位整数而言,这个乘数是11400714819323198485

这几个“理想乘数”是如何得出来的呢?这跟一个法则有关,叫黄金分割法则,而描述黄金分割法则的最经典表达式无疑就是著名的斐波那契数列,即如此形式的序列:0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55, 89, 144, 233, 377, 610, 987, 1597, 2584, 4181, 6765, 10946,…。另外,斐波那契数列的值和太阳系八大行星的轨道半径的比例出奇吻合。

对我们常见的32位整数而言,公式:

index = (value * 2654435769) >> 28

hashcode() and equals() 方法

由于HashMap的实现又依赖于hashcode() and equals()方法,先根据一个博客来整理下这两个继承于Object的方法。参考原文地址:http://www.java2blog.com/2014/02/hashcode-and-equals-method-in-java.html

These methods can be found in the Object class and hence available to all java classes. Using these two methods, an object can be stored or retrieved from a Hashtable, HashMap or HashSet.

- hashcode():

You might know if you put entry in HashMap, first hashcode is calculated and this hashcode used to find bucket(index) where this entry will get stored in hashMap.You can read more at How hashMap works in java. What if you don’t override hashcode method, it will return integer representation of memory address. 如果不重写的话,hashcode方法会返回类实例的内存地址的整形值。

Country类重写例子:

1 2 3 4 5 6 7 | |

- equals():

The equals method implements an equivalence relation on non-null object references. Read more at http://www.java2blog.com/2014/02/hashcode-and-equals-method-in-java.html#dXUX4kIP1gRjcrGt.99 You have to override equals method, when you want to define equality between two object. If you don’t override this method, it will check for reference equality(==) i.e. if tow reference refers to same object or not.

如果想比较两个对象,就必须重写equals方法。否则这个方法默认是比较实例对象的堆引用,等同于 “==” 必然就会导致不同。所以可以推测String类一定重写了equals方法。

Country类重写例子:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 | |

Key points to remember:

- If you are overriding equals method then you should override hashcode() also.

- If two objects are equal then they must have same hashcode.

- If two objects have same hashcode then they may or may not be equal

- Always use same attributes to generate equals and hashcode as in our case we have used name.

总结来说,两个对象相等他们一定拥有相同的hashcode,但是相同的邀请码并不能说对象也相等。

HashMap的结构Java实现

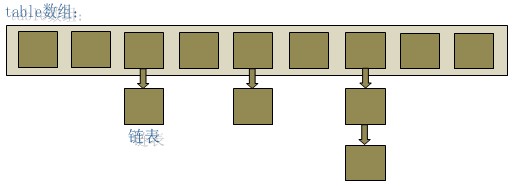

在java中,最基本的结构就是两种,一个是数组,另外一个是模拟指针(引用),所有的数据结构都可以用这两个基本结构来构造的,HashMap也不例外。HashMap实际上是一个“链表散列”的数据结构,即数组和链表的结合体。

Basically, a hash table is an array containing all of the keys to search on. The position of each key in the array is determined by the hash function, which can be any function which always maps the same input to the same output.

哈希表hashmap<key, value>就是一个数组装着所有的key. 这些key的位置是通过Object类的hash function来判断的,hash function会把同样的输入映射到同样的输出。

So when we insert something into the hash table, we use the hash function (let’s call it h) to find the location where to put it, and put it there. Now we insert another thing, hashing and storing. And another. Each time we insert data, it takes O(1) time to insert it (since the hash function is O(1).

插入时候性能是O(1)。

Looking up data is the same. If we want to find a value, x, we have only to find out h(x), which tells us where x is located in the hash table. So we can look up any hash value in O(1) as well.

查询也是O(1)。

Now to the lie: The above is a very nice theory with one problem: what if we insert data and there is already something in that position of the array? There is nothing which guarantees that the hash function won’t produce the same output for two different inputs (unless you have a perfect hash function, but those are tricky to produce). Therefore, when we insert we need to take one of two strategies:

但是在显示中我们很难有完美的Hash函数保证没有碰撞的,即不同的输入hash后的输出一样。为了解决这个问题一般用下面两种方案:

- Store multiple values at each spot in the array (say, each array slot has a linked list). Now when you do a lookup, it is still O(1) to arrive at the correct place in the array, but potentially a linear search down a (hopefully short) linked list. This is called “separate chaining”.

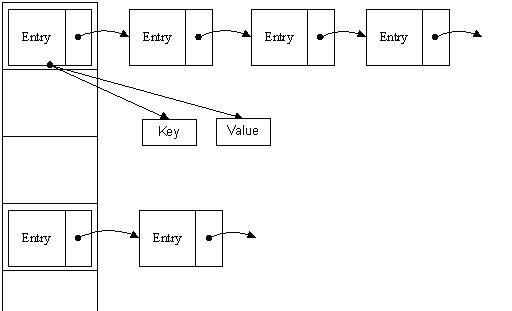

每个数组里面存一个linked list,当查询key的位置的时候还是O(1),但是可能会有碰撞的值,要在查找这个linked list。这个是最常用的一种方法—— 拉链法,我们可以理解为“链表的数组”。

1 2 3 4 5 6 7 8 9 10 11 | |

可以看出,Entry就是数组中的元素,每个 Map.Entry 其实就是一个key-value对,它持有一个指向下一个元素的引用,这就构成了链表。

- If you find something is already there, hash again and find another location. Repeat until you find an empty spot, and put it there. The lookup procedure can follow the same rules to find the data. Now it’s still O(1) to get to the first location, but there is a potentially (hopefully short) linear search to bounce around the table till you find the data you are after. This is called “open addressing”.

领一种方法就不细分析,原理是如果hash碰撞了就再hash一下,意思是hash的hash来得到其他的地址。

Basically, both approaches are still mostly O(1) but with a hopefully-short linear sequence. We can assume for most purposes that it is O(1). If the hash table is getting too full, those linear searches can become longer and longer, and then it is time to “re-hash” which means to create a new hash table of a much bigger size and insert all the data back into it.

如果Hash表快满的时候,插入和查找性能就会变差,因为碰撞会变多。这个时候(假如默认数组是16长度,那么插满0.75*16=12的时候就触发)就要resize整个hash表,就是扩大数组,把原来的Entry都重新hash一遍。非常消耗性能。

参考链接

http://docs.oracle.com/javase/7/docs/api/java/util/HashMap.html

http://stackoverflow.com/questions/4363539/how-does-hashing-have-an-o1-search-time